Artificial Intelligence at Cambridge University – Anh attends a talk by Prof Andrew Blake, Chairman of Samsung’s AI

Darwin College organises eight themed public lectures each year, beginning in the second term of the academic year. This year’s theme is “Vision”. My personal tutor, Tony, introduced me to the lectures, and they immediately caught my interest as a wonderful opportunity to learn more about my field of study. I thought I might even discover what I want to specialise in later in life. Two lectures in this series discussed two of the most talked-about topics in Computer Science: Big Data and Artificial Intelligence (AI). As an aspiring Computer Science student, I could not help but attend them, and they exceeded my expectations.

Miss Sophie Hackford, a futurist and co-founder of 1715 Labs, gave the first of the two talks, “Vision of Future Technology”. In this lecture, she discussed the increasingly important roles of data and AI in our daily lives, as well as our relationships with robots.

Sophie argued that the world as seen by computers was becoming a dominant reality, rivalling the ordinary world as we see it. “The data about ourselves is as important almost in our choices and our behaviours as our physical body and human self are”, she proclaimed. Recent developments in technology allowed ordinary citizens to access an unparalleled amount of data, be it pictures of the entire Earth or the number of customers going to a certain supermarket. The increasing resolution of cameras had also led to a rise in the use of drones and facial recognition technology, which unsurprisingly raised a plethora of questions about privacy and security. How can we make sure that ethics are upheld in the future? How can we prevent malicious entities from using these technologies to cause great harm? Besides capturing real-life data, sophisticated AI could also generate data from user inputs and algorithms. This meant that you could create fake videos of people or fabricate information about them. Although AI was still early in its development, it had already raised a great number of trust issues. If ethical standards and data security were not maintained, humans might no longer be able to believe what they see.

Aside from the surrounding world, AI could also have a tremendous impact on humans in the future. It could affect humanity on both societal and personal levels. On a grand scale, AI might replace a substantial number of the jobs that exist in the workforce today. It could also cause a type of ‘AI nationalism’, where the world’s superpowers scrambled to secure their technological leverage over their competitors, be it economic or military. This might lead to a complete transformation of geopolitics from what it was today. Humans could potentially face an existential threat, in which machines somehow became conscious and decided to eliminate humanity. On a more personal level, the relationship between humans and robots was becoming blurrier. People were feeling more comfortable talking to robots than to real humans. Some had even decided to marry holograms or robotic avatars of their favourite characters.

Despite the current shift and the uncertain future of AI, humans still had some advantages over robots and AI, such as mobility. When humans interacted with the environment, they did not have to think about every little action, such as bending down or bringing their arms forwards, while robots had to take into account every little move that they made. Maybe it is time we had a more symbiotic relationship with robots, in which we train them where we are better and they train us where they are better. AI could also exist in our lives in the future in the form of a body double, a digital version of ourselves. Algorithms already knew more about us than we knew ourselves, so it was only a matter of time until we could send these avatars to do the talking on our behalf, such as making a transaction with the bank or doing a check-up with a doctor. We could even borrow the avatar of another person to pick their brain should they be busy themselves.

Prof. Andrew Blake, Chairman of Samsung’s AI Research Centre in Cambridge, gave the second talk. He discussed robots that can really see, the principles behind their vision and the challenges facing them in the next decade.

The first technology he mentioned was motion capture, which had various uses in medicine and in movie special effects. Special markers were placed on actors in front of a camera. Since the markers were easy to track, a computer could follow the movements of the actors. This had become more and more sophisticated over the years, growing increasingly automated and accurate. The second was emotional intelligence: machines that could perceive and discern human emotions. Building on cameras that could efficiently detect faces, we now had eye trackers that knew where you were looking, which could be used to distinguish between a person who had schizophrenia and one who did not.

Combining these capabilities, a new system was developed that was able to record the movements of a person’s face and track its key points to classify expressions. The third type of machine that Prof. Blake explored was that used in medical image processing. These machines were developed not only to combine a patient’s X-ray images for a more precise comparison but also to recognise the patient’s organs. Another type of machine allowed surgeons to command the computer to do various tasks without using the keyboard and mouse. The last technology that he mentioned was autonomous vehicles. From the driver assistance of the early 2000s to the imminent driverless vehicles of today, we are getting closer and closer to a future where a traffic system with extreme autonomy is possible.

From there, Prof. Blake went on to talk about three important principles of these seeing machines. But before that, he emphasised that seeing was a difficult task for a machine. Our human brains had such powerful parallel thinking capabilities that we could automatically recognise the main subject in a scene and filter out all the unnecessary details. We also possessed a high tolerance for visual ambiguity and were able to develop our own predictions of what the scene might be and revise them as more information came in. The first principle for vision was probabilistic mechanisms. Instead of doing pure arithmetic, computers needed to become a kind of ‘risks machines’. When separating out the object in an image or video, the computer did not know what the object was but dealt with individual pixels and the relationships among them. Based on the idea of ‘coherence’, the computer could calculate the probability that two adjacent pixels both belonged to the object or both did not. This could be used for background removal or image stabilisation.
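To make the idea of ‘coherence’ a little more concrete, here is a minimal sketch in Python. It is my own toy illustration, not the method shown in the talk: the brightness model, the function names and every number in it are assumptions. Each pixel gets a probability of belonging to the object from its brightness alone, and a coherence bonus then favours neighbouring pixels that share a label, more strongly when they look alike.

```python
import math
from itertools import product

def foreground_likelihood(intensity, fg_mean=0.8, bg_mean=0.2, spread=0.2):
    """Probability that one pixel is part of the object, from brightness alone.

    Assumption: the object is bright (around 0.8) and the background dark (around 0.2).
    """
    fg = math.exp(-((intensity - fg_mean) / spread) ** 2)
    bg = math.exp(-((intensity - bg_mean) / spread) ** 2)
    return fg / (fg + bg)

def joint_label_probabilities(pixel_a, pixel_b, coherence=2.0):
    """Probability of each (label_a, label_b) pair for two adjacent pixels.

    Labels: 1 = object, 0 = background. Pairs with matching labels get a
    bonus that grows with how similar the two pixels look.
    """
    p_a = foreground_likelihood(pixel_a)
    p_b = foreground_likelihood(pixel_b)
    similarity = math.exp(-5.0 * abs(pixel_a - pixel_b))
    scores = {}
    for label_a, label_b in product((0, 1), repeat=2):
        unary = (p_a if label_a else 1 - p_a) * (p_b if label_b else 1 - p_b)
        pairwise = math.exp(coherence * similarity) if label_a == label_b else 1.0
        scores[(label_a, label_b)] = unary * pairwise
    total = sum(scores.values())
    return {labels: score / total for labels, score in scores.items()}

# A clearly bright pixel next to an ambiguous mid-grey one.
probs = joint_label_probabilities(0.75, 0.5)
for labels, p in sorted(probs.items()):
    print(labels, round(p, 3))
```

On its own, the mid-grey pixel is a coin toss, but because its neighbour is clearly part of the object, the pair “both object” comes out more likely than “object next to background”. Real systems apply the same reasoning across every pair of neighbouring pixels in the image at once.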

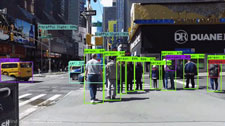

The second principle was that seeing machines were taught by feeding them data, as conventional programming was too difficult. For example, machines were shown a series of correct images that they needed to recognise and another series of near misses. Given enough examples, they would be able to reliably tell the images apart. The last principle Prof. Blake wanted to mention was ‘the rise of deep networks’. In a deep convolutional network, the input image went through a sequence of operations, often alternating between linear and nonlinear ones, before reaching the output, the classification of the image. Deep neural networks had helped image classification efforts tremendously, cutting the error rate from almost 30% in 2010 to only around 3% in 2016, having already beaten the human error rate of around 6% in 2015.
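The alternation between linear and nonlinear operations can be sketched in a few lines of Python. This is only a toy under my own assumptions: it works on a four-value “image” instead of a real picture, and the weights are hand-picked and hypothetical, whereas a real deep network has many more layers and learns its weights from examples.

```python
import math

def convolve(signal, kernel):
    """Linear step: slide the kernel over the signal (valid positions only)."""
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]

def relu(values):
    """Nonlinear step: keep positive responses and zero out the rest."""
    return [max(0.0, v) for v in values]

def dense(values, weights, biases):
    """Linear step: one weighted sum per output class."""
    return [
        sum(v * w for v, w in zip(values, row)) + b
        for row, b in zip(weights, biases)
    ]

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(signal):
    """Return [P(rising edge), P(flat)] for a four-value signal."""
    edge_kernel = [-1.0, 1.0]               # hypothetical, hand-picked weights
    features = relu(convolve(signal, edge_kernel))
    class_weights = [[1.0, 1.0, 1.0],       # class 0: "contains a rising edge"
                     [-1.0, -1.0, -1.0]]    # class 1: "flat"
    return softmax(dense(features, class_weights, [-0.5, 0.5]))

edge = classify([0.0, 0.0, 1.0, 1.0])
flat = classify([0.5, 0.5, 0.5, 0.5])
print("edge signal:", [round(p, 3) for p in edge])
print("flat signal:", [round(p, 3) for p in flat])
```

The convolution and the weighted sums are the linear steps; the `relu` in between is the nonlinear one. Without it, the whole chain would collapse into a single linear operation, which is why the alternation matters.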

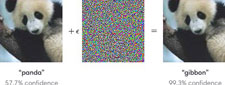

Lastly, he explored four challenges that seeing machines still face today. The first was adversarial attacks, in which someone who knew how the neural network classified images added a particular pattern of noise to a picture to make the machine misjudge it. This could even happen in the physical world, with the attacker sticking pieces of paper on an object to reproduce that pattern.
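Here is a small Python sketch of how such an attack can work. The talk did not name a specific method, so this is my own assumption: a simple linear classifier attacked in the style of the fast gradient sign method, with hypothetical weights and a four-pixel “image”.

```python
def score(weights, bias, pixels):
    """Linear classifier: a positive score means 'stop sign', a negative one 'other'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def sign(x):
    """Return 1, 0 or -1 depending on the sign of x."""
    return (x > 0) - (x < 0)

def adversarial_example(weights, pixels, epsilon):
    """Move every pixel by at most epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to the input
    is the weight vector itself, so the attacker only needs the weights' signs.
    Pixel values are clipped to stay within the valid range [0, 1].
    """
    return [
        min(1.0, max(0.0, p - epsilon * sign(w)))
        for w, p in zip(weights, pixels)
    ]

weights = [0.9, -0.6, 0.8, -0.7]   # hypothetical model, known to the attacker
bias = -0.1
image = [0.6, 0.4, 0.5, 0.5]       # hypothetical four-pixel image

attacked = adversarial_example(weights, image, epsilon=0.1)
print("original score:", round(score(weights, bias, image), 3))
print("attacked score:", round(score(weights, bias, attacked), 3))
```

No pixel changes by more than 0.1, yet the score flips from positive to negative, so the classifier’s answer changes. With thousands of pixels, the change to each one can be far smaller and still add up to a wrong answer, which is why such noise can be invisible to a human.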

The second challenge was that of vision and language. The information in a pixel of an image was continuous, while the words of a language were discrete, so it was somewhat difficult to relate the two. But some headway had been made, as scientists had been able to represent language in a continuous space. The third was that it took considerably more data for a neural network to learn a concept than it did for a human. While a human only needed one or two examples to know what an object looked like, a neural network required hundreds or thousands of images of the object before being able to recognise it correctly. The last challenge was designing AI for safety-critical systems. For AI to be used commercially, it needed to reach a certain level of safety, especially in autonomous driving, where a mistake in calculation could lead to severe injury or even death for the passengers.
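The idea of placing discrete words in a continuous space, from the second challenge above, can be sketched in Python as follows. The three-number word vectors and the image feature are invented for illustration; real systems learn vectors with hundreds of dimensions from large amounts of text and images.

```python
import math

# Hypothetical word vectors, made up for this example.
WORD_VECTORS = {
    "cat":   [0.9, 0.8, 0.1],
    "dog":   [0.8, 0.9, 0.2],
    "truck": [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Similarity of direction between two vectors (1.0 means identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_word(vector):
    """Return the vocabulary word whose vector is most similar to the given one."""
    return max(
        WORD_VECTORS,
        key=lambda word: cosine_similarity(vector, WORD_VECTORS[word]),
    )

# Assumption: a vision model has mapped a photo into the same space as the words.
image_feature = [0.9, 0.7, 0.1]

print(round(cosine_similarity(WORD_VECTORS["cat"], WORD_VECTORS["dog"]), 3))
print(round(cosine_similarity(WORD_VECTORS["cat"], WORD_VECTORS["truck"]), 3))
print(nearest_word(image_feature))
```

Once words are points in a continuous space, “cat” and “dog” can sit close together while “truck” sits far away, and an image mapped into the same space can be matched to its nearest word.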

Developments in computer science and digital technologies have enabled governments and private companies to monitor our behaviour and to collect, analyse and distribute personal data on a large scale. These advances could pose a huge threat to society if we don’t start preparing now, since they raise moral and ethical issues. Problems such as data ownership, privacy and the rights of robots need to be figured out soon so that we are not caught unprepared when the technology arrives. We have to find a compromise between keeping our privacy and being able to use these wonderful tools. We, computer scientists, will undoubtedly write these algorithms of the future. Therefore, we bear full responsibility for all the code that we deploy. We also need to ensure that these algorithms do not infringe on laws or rights of any kind.

In my opinion, a future with robots and AI is better than one without them. Robots could enable us to live our lives to the fullest while they handle the tedious chores that we have to go through every day. Much of the fear and scepticism about AI comes from having little understanding of it. We may think that AI knows everything, but it does not. In fact, its thought process is very different from ours. An individual AI is only able to handle the specific task coded in its algorithms. It has no abilities like Skynet’s at all. Once these misconceptions about AI have been cleared up and the preparations are done, we will be ready to welcome robots and AI into our lives with open arms.

From these two talks, we can see that we are at the very beginning of a new era in which humans and robots with artificial intelligence work closely together. I think the leaders who will bring these technologies into our lives will be none other than us, the young generation. Thus, we need to start preparing now by studying hard and getting a good education, so that when these technologies no longer exist only in the lab, we are able to fully integrate them into our daily lives.

Lectures like these are a great way for us to enrich our knowledge of the world in general. You could also discover new things that you probably have not been taught in school yet, as I did with our prospective relationship with robots in Sophie’s talk. There is also the chance to meet acclaimed authors, professors and scientists and to ask them questions in person, which you would not otherwise be able to do. Therefore, I highly recommend that you choose and attend a few lectures that are of interest to you.

You can watch these two talks or other Darwin College Lecture Series talks here: https://lectures.dar.cam.ac.uk/